Data Manipulation for Deep Learning

The dataset drives our choice of hyperparameters and of the many other design details involved in making accurate predictions with deep learning. We split it into a training set, a validation set, and a test set. The model is trained on the training set, and the hyperparameters are chosen (usually by trial and error) on the validation set: we keep the setting that achieves the highest validation accuracy and then fix the model. The test set is reserved for a single use at the very end of the pipeline. The model is evaluated on it exactly once, and that accuracy gives a reliable estimate of the model's performance on truly unseen data.
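As a minimal sketch of this three-way split, assuming the data fits in NumPy arrays `X` (inputs) and `y` (labels), the hypothetical helper `train_val_test_split` shuffles the indices once and carves off disjoint test, validation, and training subsets. The 10%/10% fractions and the fixed seed are illustrative assumptions, not values prescribed by the text.

```python
import numpy as np

def train_val_test_split(X, y, val_frac=0.1, test_frac=0.1, seed=0):
    """Hypothetical helper: shuffle once, then split into disjoint subsets."""
    rng = np.random.default_rng(seed)
    n = len(X)
    idx = rng.permutation(n)          # random order of all sample indices
    n_test = int(n * test_frac)
    n_val = int(n * val_frac)
    test_idx = idx[:n_test]                    # used once, at the very end
    val_idx = idx[n_test:n_test + n_val]       # used to pick hyperparameters
    train_idx = idx[n_test + n_val:]           # used to fit the weights
    return ((X[train_idx], y[train_idx]),
            (X[val_idx], y[val_idx]),
            (X[test_idx], y[test_idx]))

# Toy data: 50 samples with 2 features each
X = np.arange(100).reshape(50, 2).astype(float)
y = np.arange(50)
train, val, test = train_val_test_split(X, y)
print(len(train[0]), len(val[0]), len(test[0]))  # 40 5 5
```

Shuffling before splitting matters when the data is stored in some order (for example, sorted by class); otherwise the test set could end up containing only a few classes.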

Now that we have a training set on which to run the optimization, let's look at how manipulating this data can help us train our model.

Data Preprocessing

The loss function is computed on a mini-batch of the training data at every step of the optimization algorithm. Therefore, the loss landscape on which optimization is performed depends directly on the training data. The aim of preprocessing the data is to make it more amenable to efficient training.

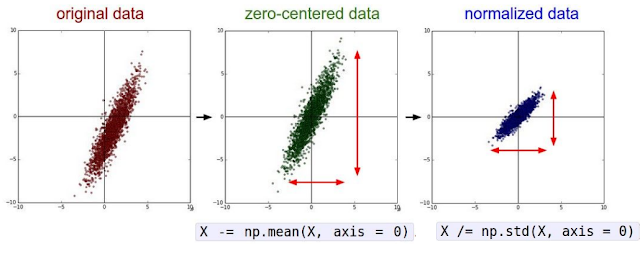

We usually normalize the data before using it, making the data cloud zero-centered and ensuring that all the features have the same variance. After normalization, the loss becomes less sensitive to small changes in the weights, which makes it easier to optimize. Note that the test set must be normalized with exactly the same mean and standard deviation that were computed on the training set.
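The sketch below illustrates this with NumPy on synthetic two-feature data (an assumption; any array with one row per sample works the same way). The hypothetical helpers `fit_standardizer` and `standardize` compute the per-feature mean and standard deviation on the training set only and then reuse those exact statistics for the test set; the small `eps` is an added safeguard against division by zero, not something the text specifies.

```python
import numpy as np

def fit_standardizer(X_train, eps=1e-8):
    """Compute per-feature mean and std on the TRAINING set only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0) + eps  # eps guards against division by zero
    return mean, std

def standardize(X, mean, std):
    """Zero-center and rescale using precomputed statistics."""
    return (X - mean) / std

rng = np.random.default_rng(0)
# Synthetic features with very different offsets and scales
X_train = rng.normal(loc=[5.0, -3.0], scale=[10.0, 0.5], size=(1000, 2))
X_test = rng.normal(loc=[5.0, -3.0], scale=[10.0, 0.5], size=(200, 2))

mean, std = fit_standardizer(X_train)
X_train_n = standardize(X_train, mean, std)
X_test_n = standardize(X_test, mean, std)  # reuse the training statistics

print(X_train_n.mean(axis=0), X_train_n.std(axis=0))
```

Recomputing the statistics on the test set instead would quietly shift the inputs the model sees at evaluation time, so the test accuracy would no longer measure the same model.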

Some standard practices of data preprocessing for image data include:

- Subtract the mean image (used in AlexNet)

- Subtract per-channel mean (mean along each channel, used in VGGNet)

- Subtract per-channel mean and divide by per-channel std (used in ResNet)
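A minimal NumPy sketch of the three schemes, assuming a batch of images stored as a float array of shape (N, H, W, C). The random batch stands in for real training data, and the actual AlexNet, VGGNet, and ResNet pipelines may differ in details such as which images the statistics are computed over. In every case the statistics come from the training data and would be reused unchanged on the validation and test sets.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in training batch: N=64 images, 32x32 pixels, 3 channels
X_train = rng.uniform(0, 255, size=(64, 32, 32, 3))

# 1) Subtract the mean image: one mean per pixel position and channel
mean_image = X_train.mean(axis=0)              # shape (32, 32, 3)
X_a = X_train - mean_image

# 2) Subtract the per-channel mean: one scalar per channel
channel_mean = X_train.mean(axis=(0, 1, 2))    # shape (3,)
X_b = X_train - channel_mean                   # broadcasts over N, H, W

# 3) Subtract per-channel mean and divide by per-channel std
channel_std = X_train.std(axis=(0, 1, 2))      # shape (3,)
X_c = (X_train - channel_mean) / channel_std

print(mean_image.shape, channel_mean.shape, channel_std.shape)
```

The only difference between the schemes is which axes the statistics are averaged over: the mean image keeps a separate value for every pixel position and channel, while the per-channel variants collapse everything except the channel axis.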

Data Augmentation

Data augmentation is a commonly used technique in which we apply image transformations to the training set to increase both the size and the diversity of the labeled training data. Beyond increasing the number of training samples, it also acts as a form of regularization by adding noise to the training data.

Image augmentation has become a common implicit regularization technique in computer vision for combating overfitting in deep convolutional neural networks. Basic image transformations include flipping, rotating, scaling, blurring, jittering, and cropping. Only transformations that make sense for the task should be applied. For example, a vertical flip is generally not applied to car images, since upside-down cars do not appear in real data, but the same transformation is perfectly valid for an image of a white blood cell in a blood smear, which has no natural "up" direction.
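As a hedged illustration, the hypothetical `augment` function below applies two of these basic transformations, a random horizontal flip and a random crop (implemented here as zero-padding followed by cropping back to the original size), to a single (H, W, C) NumPy image. The vertical flip is gated behind an `allow_vertical_flip` flag, reflecting the point that it suits blood-smear images but not car images. The flip probabilities and padding size are assumptions, not values from the text.

```python
import numpy as np

def augment(image, rng, allow_vertical_flip=False, pad=4):
    """Hypothetical helper: return a randomly transformed copy of an (H, W, C) image.

    Set allow_vertical_flip=True only when upside-down images are plausible
    for the task (e.g. cells in a blood smear, not cars).
    """
    out = image
    # Random horizontal flip with probability 0.5
    if rng.random() < 0.5:
        out = out[:, ::-1, :]
    # Random vertical flip, only if it preserves the meaning of the label
    if allow_vertical_flip and rng.random() < 0.5:
        out = out[::-1, :, :]
    # Random crop: zero-pad the borders, then cut back to the original size
    h, w, _ = out.shape
    padded = np.pad(out, ((pad, pad), (pad, pad), (0, 0)), mode="constant")
    top = rng.integers(0, 2 * pad + 1)   # offset in [0, 2*pad]
    left = rng.integers(0, 2 * pad + 1)
    out = padded[top:top + h, left:left + w, :]
    return out.copy()

rng = np.random.default_rng(0)
image = rng.uniform(0, 1, size=(32, 32, 3))
# Draw several augmented versions of the same training image
batch = np.stack([augment(image, rng) for _ in range(8)])
print(batch.shape)  # (8, 32, 32, 3)
```

Because a fresh random transformation is drawn every time an image is sampled, the network rarely sees exactly the same input twice, which is where the regularizing effect comes from.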
