Image Classification using CNNs: MNIST dataset

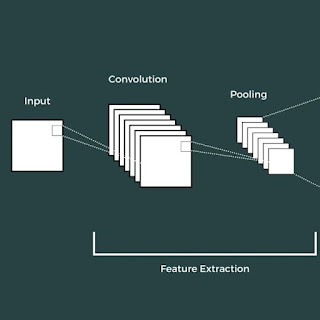

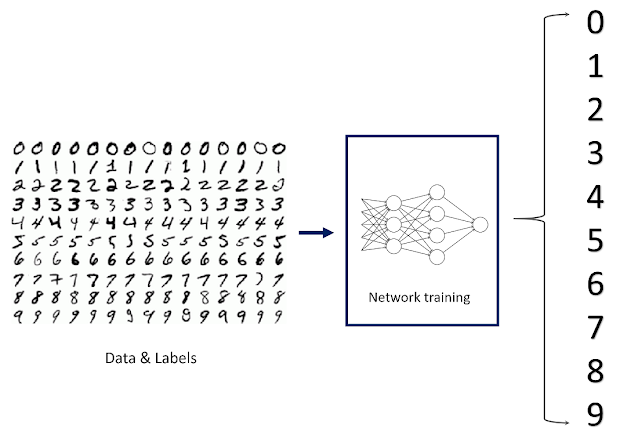

Image classification is a fundamental task in computer vision: the model takes in an entire image and assigns it a single class label. Typically, the term refers to images in which only one object appears and is analyzed.

Now that we have all the ingredients required to code up our deep learning architecture, let's dive right into creating a model that can classify handwritten digits (the MNIST dataset) using Convolutional Neural Networks from scratch. The MNIST dataset consists of 70,000 $28 \times 28$ grayscale images of handwritten digits (60,000 for training and 10,000 for testing). I will be using PyTorch for this implementation. Don't forget to change the runtime to GPU to get accelerated processing!

The first step is to import the relevant libraries that we will be using throughout our code.

```python
import torch                     # tensors and GPU support
from torch import nn             # neural network layers and loss functions
import torchvision               # datasets and image transforms
import matplotlib.pyplot as plt  # plotting images and training curves
```

Downloading and Pre-processing the Dataset

The dataset ...